Using Artificial Intelligence to create a Low-Rank Adaptation (LoRA) model of myself on local hardware

I found some pictures of me from my younger, stress-free days 😂!

I took the time to train a Low-Rank Adaptation (LoRA) model of myself on my own local hardware. I plan to use this knowledge (and the results) in future projects and videos. Judging by some of the outputs, I am guessing the AI thought I was wearing earrings in some of the photos.

Creating the LoRA originally took about 1-3 hours on my RTX 3090. I used a few open source tools available on GitHub (Stable Matrix for Stable Diffusion, Fooocus, Kohya trainer, and Flux) and read countless pages of research, including "QLoRA – How to Fine-Tune an LLM on a Single GPU" and "ControlNet: A Complete Guide", among others.

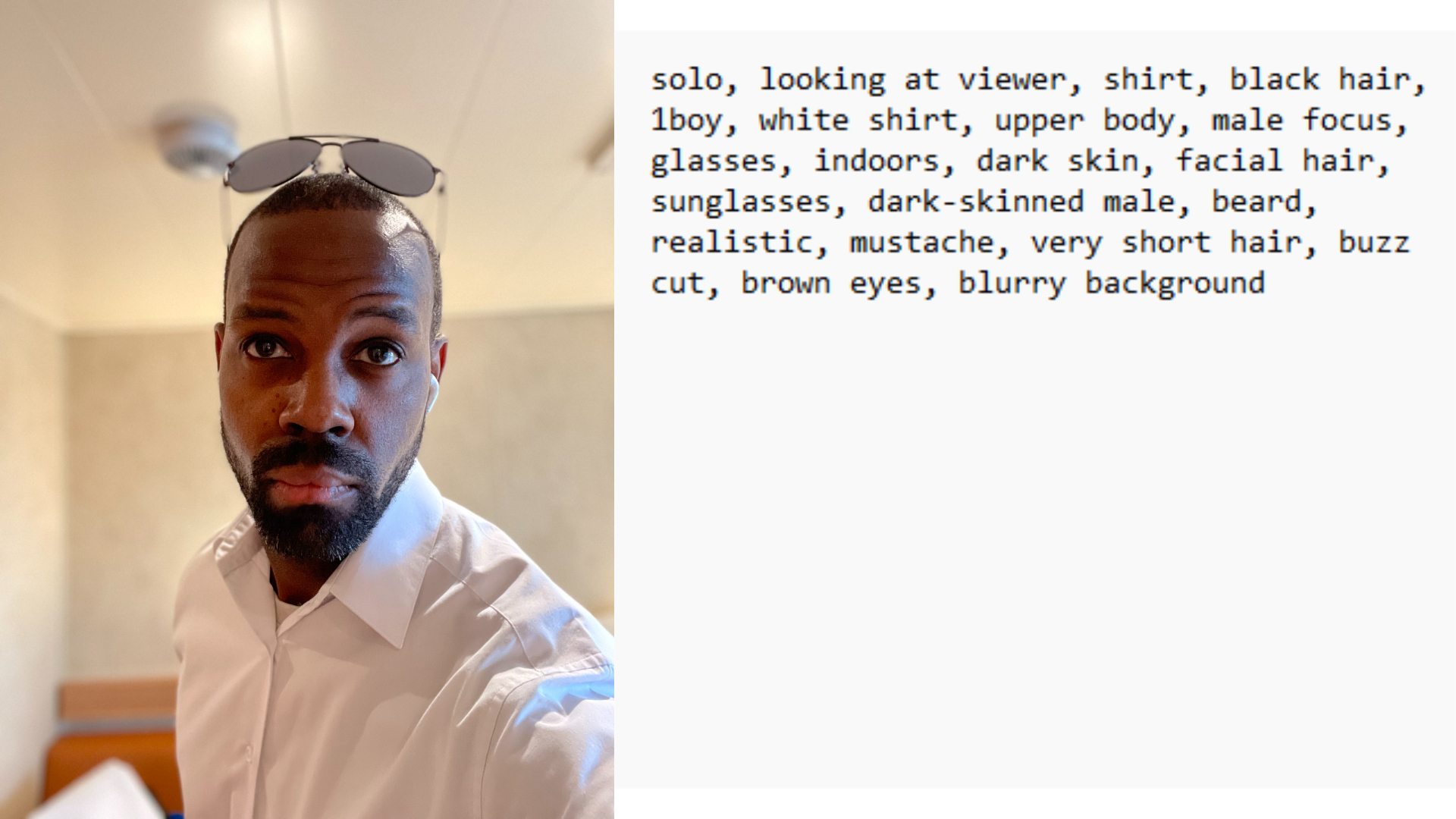

The LoRA was actually used to create my King Hunter YouTube profile picture. I did this locally because I didn't want to upload my images to something I didn't control, and it gave me a great way to learn at least a little about how this all works.

I used a few of the designs in other projects (e.g. Sugar Momma), and I am researching projects where AI will play a bigger role.

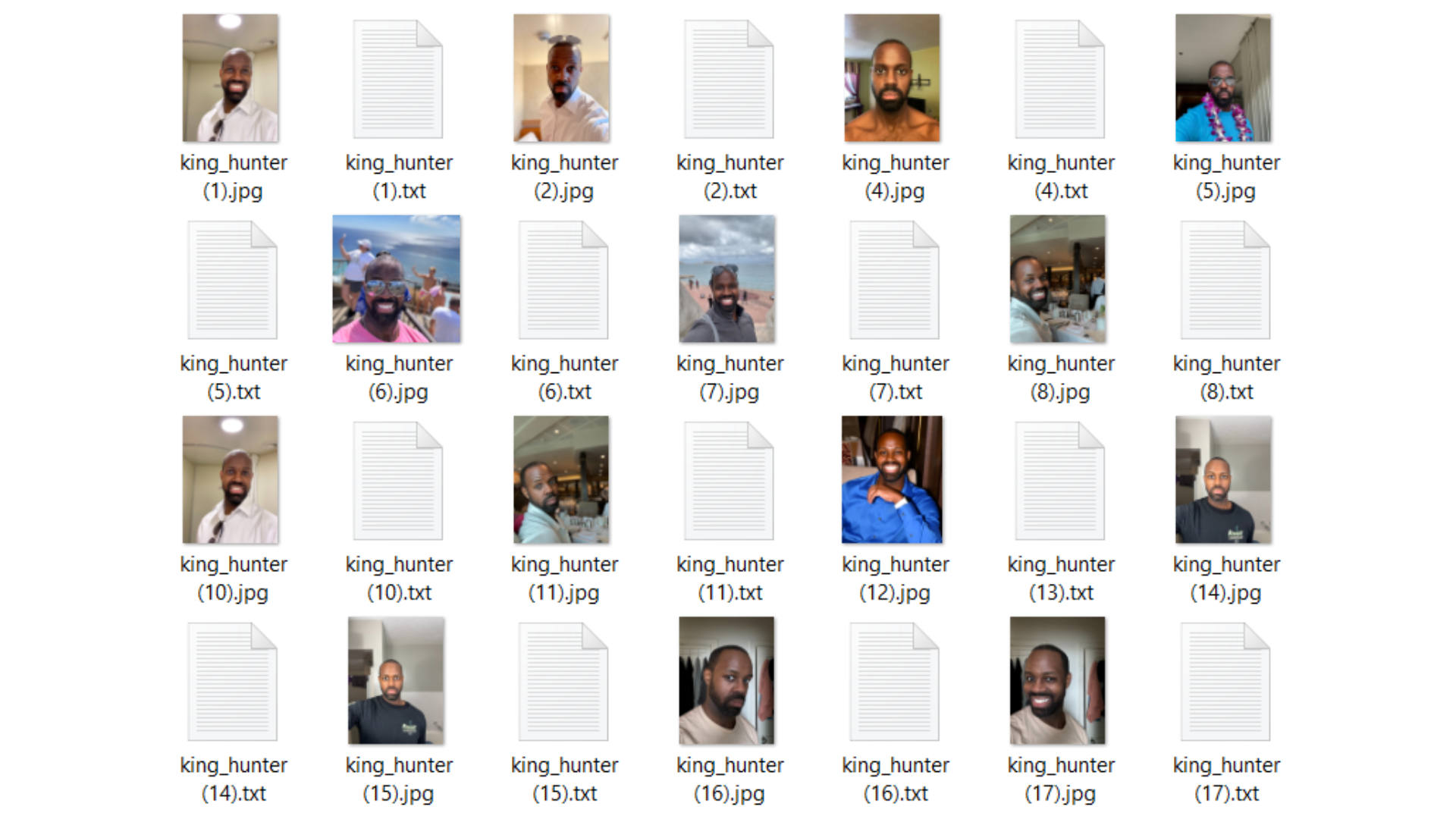

Training Images

My training set consisted of only 14 images, which were iterated over roughly 40 times while creating the LoRA (which turned out to be only about 10 MB in file size).
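To put those numbers in perspective, here is a rough back-of-the-envelope sketch. It assumes each of the 14 images was shown about 40 times, and the batch size is purely a hypothetical parameter for illustration, not a setting recorded from my actual run.

```python
import math


def samples_seen(num_images: int, repeats: int) -> int:
    """Total image presentations if every image is shown `repeats` times."""
    return num_images * repeats


def optimizer_steps(num_images: int, repeats: int, batch_size: int = 1) -> int:
    """Number of training steps, assuming a fixed (hypothetical) batch size."""
    return math.ceil(samples_seen(num_images, repeats) / batch_size)


if __name__ == "__main__":
    # 14 images x roughly 40 passes
    print(samples_seen(14, 40))                   # 560
    # batch_size=2 is an illustrative assumption only
    print(optimizer_steps(14, 40, batch_size=2))  # 280
```

So even a tiny dataset ends up being seen several hundred times, which is part of why the captions on each image matter so much.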

In addition, I manually added tags to ensure that the system understood every item in each picture. That's what the text files next to the pictures are for.
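Since every picture needs its own tag file, a small sanity check before training can save a wasted run. The sketch below is not part of any of the tools mentioned; it assumes each caption is a `.txt` file with the same base name as its image and that tags are comma-separated. The function names and the folder name are hypothetical.

```python
from pathlib import Path

# Image extensions to look for (an assumption; adjust to your dataset)
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".webp"}


def find_missing_captions(folder):
    """Return names of images in `folder` with no same-named .txt file."""
    missing = []
    for path in sorted(Path(folder).iterdir()):
        if path.is_file() and path.suffix.lower() in IMAGE_EXTENSIONS:
            if not path.with_suffix(".txt").is_file():
                missing.append(path.name)
    return missing


def read_tags(caption_path):
    """Split a caption file into a list of non-empty, trimmed tags."""
    text = Path(caption_path).read_text(encoding="utf-8")
    return [tag.strip() for tag in text.split(",") if tag.strip()]


if __name__ == "__main__":
    folder = Path("training_images")  # hypothetical folder name
    if folder.is_dir():
        for name in find_missing_captions(folder):
            print(f"No caption file for {name}")
```

Running it against the training folder lists any image that would otherwise go into training without tags.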

LoRA AI Project

Afterwards, I generated hundreds of test images to make sure that the LoRA was working and that image generation was behaving as expected. Here are a few examples of those images:

King Hunter YouTube Profile

Here is the final image I actually use on my King Hunter YouTube channel (after some edits and changes).

As stated earlier, there will be future projects with artificial intelligence and I am aiming for something great.